Uncanny AI

Exploring speculative futures of legible AI

“Dave, I can’t put my finger on it, but I sense something strange about him.”

Frank Poole, 2001: A Space Odyssey (1968)

Premise

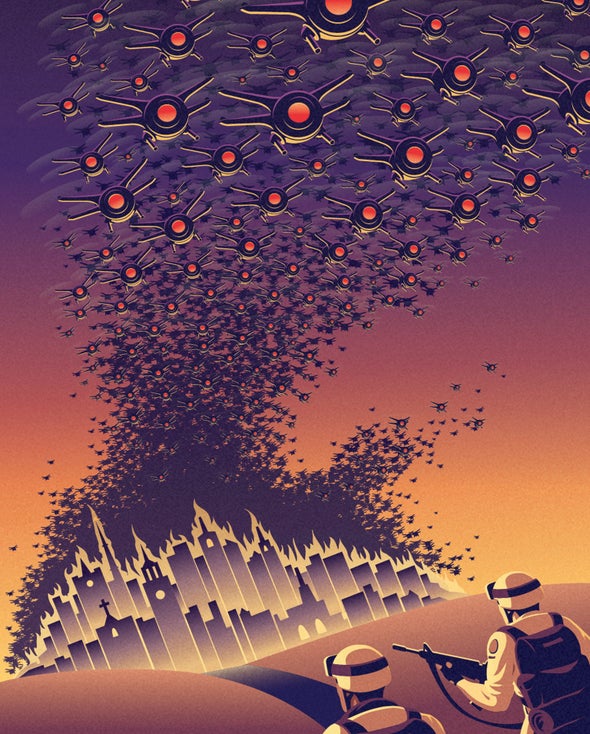

We’ve all been there, feeding the definitional dualism of AI with nightmares of the Terminator and HAL 9000. But why is that so? Why is it that when we think of future AI we imagine killer robots bent on destroying us, rather than the possibilities of nanotechnology able to cure disease? Why do we fear that our smart assistants are spying on us more than assisting us? The answer might lie in the fact that too little information is conveyed about what really goes on behind the scenes with our devices. The Uncanny AI Project stemmed from this notion of making the future of HCI legible.

Challenges

- How does one represent AI-enabled devices and services in a manner that makes them more readable and acceptable to a wider human audience?

- How does one navigate years of definitional dualism around AI and technological futures?

Methodology

- Research through Design

- Speculative Design

- Human Centred Design

- Graphic Design & Semiotics

Research & Contribution

I worked as a Research Associate alongside Prof Paul Coulton, Dr Joseph Lindley, Franziska Pilling, and Dr Adrian Gradinar on the Uncanny AI Project, which attempts to tackle gaps in AI legibility by presenting design as a critical actant in imagining futures of transparent AI.

Major Contributions

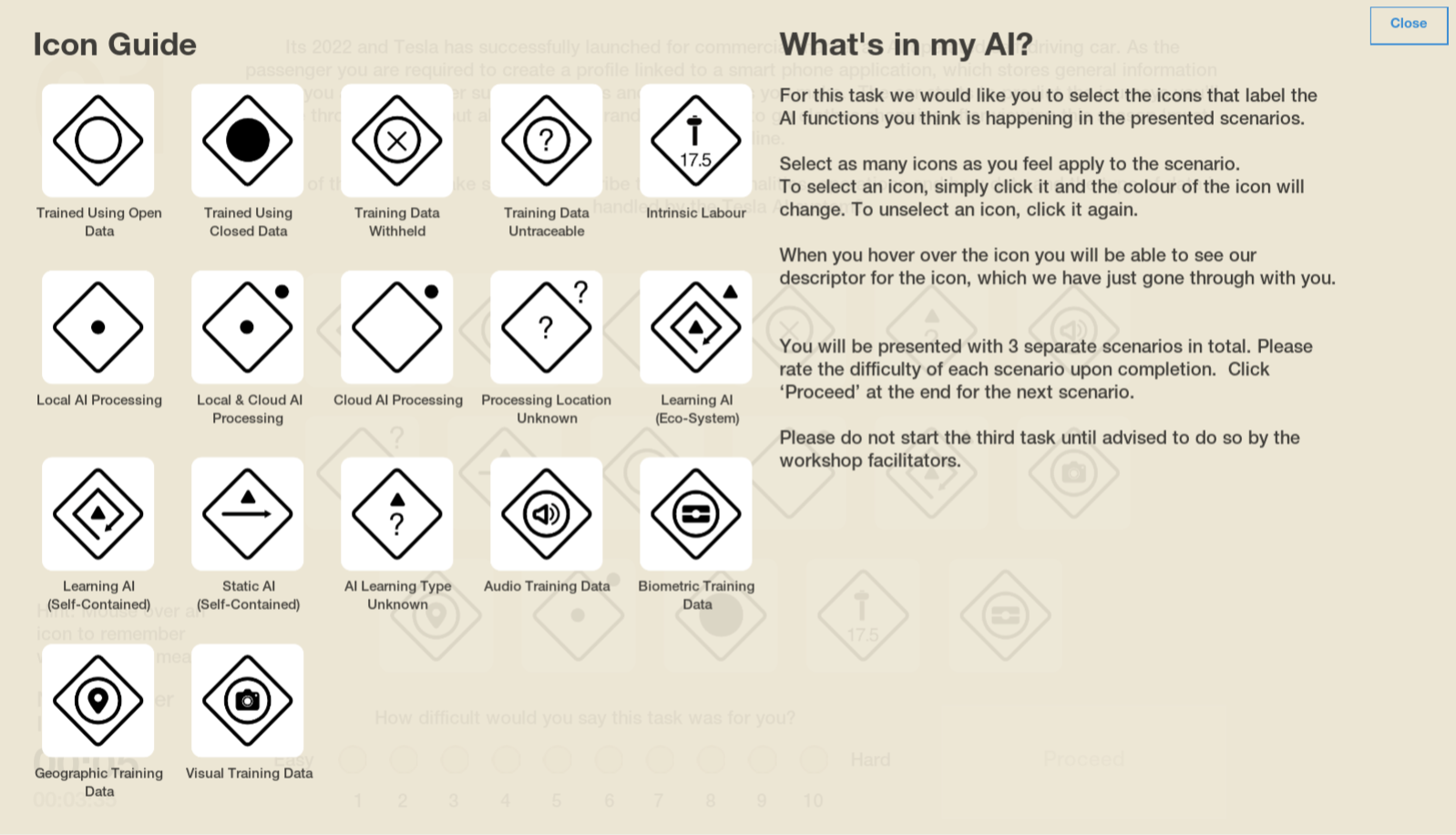

- Designing iconography for exploring legibility in AI.

- Crafting a ludic workshop for testing and co-designing legibility through iconography.

- Adapting the physical workshops into a unique ludic experience in response to COVID-19 restrictions.

- Designing diegetic prototypes and fictions around legible AI futures.

Approaching the definitional dualism

The main focus of this project has been navigating common misconceptions around AI and how best to mitigate the concerns arising from its associated definitional dualism. The reality is that killer robots don’t really exist (yet), though there is no shortage of popular media prophesying the rise of the machines, feeding the growing rhetoric that AI is out to get you. The networkification¹ associated with AI systems has created challenges for technologies such as IoT and machine learning (ML): as AI-powered devices and objects proliferate, they are all connected together, interacting in ways that are for the most part unknown to the average user. Many people are not aware of what their smart devices do behind their backs. Factors like this affect how readily technologies such as IoT are understood and adopted.²

The irony is that technological progress seems to require steps towards sentience, yet each step forward suffers from this definitional dualism. It is not that we need robots that think like humans; rather, that is the only logical direction for AI presently understood.

Artificial Intelligence might not be out to get us yet, but general sentiment about AI holds that it is. So how do you work around this definitional dualism?

This research therefore took two approaches: one sought to understand how AI can be made to appear less frightening, and the other explored what a future of more acceptable AI would look like. The one thing that stood out across the studies into AI and definitional dualism was a lack of understanding, or legibility, of AI. Surely, if users are aware of what AI is doing in their midst, they have a better chance of accepting it among them? How, then, does one make technology easily readable?

Reading AI

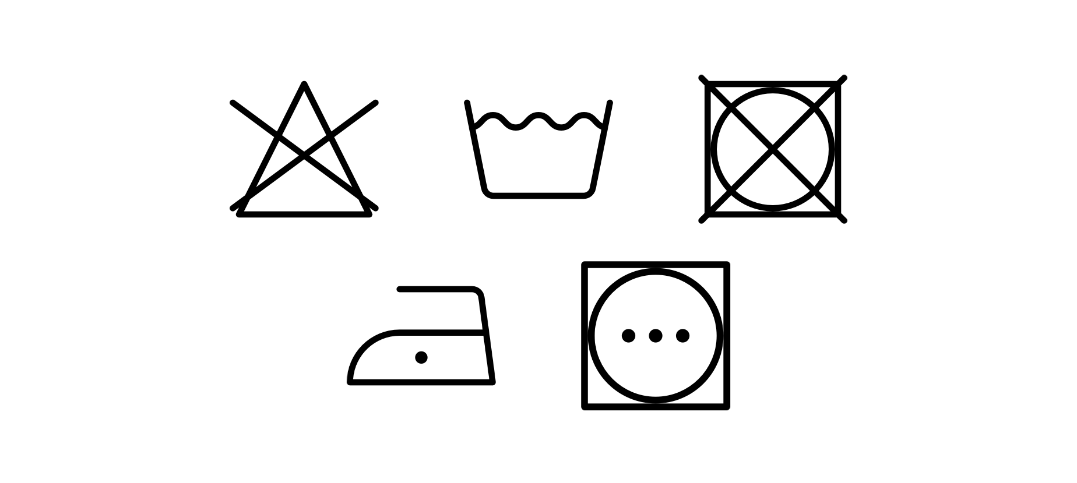

Laundry icons: a sublime language of pictograms found on all clothing and apparel. These laundry-specific hieroglyphs have become part of the mundanity of life as we know it. Ironically, though they are seen everywhere, many people are not aware of what they symbolise. Take, for instance, the symbol for bleach, a triangle: it is no coincidence that it shares its form with the alchemical symbol for fire, which in turn has semiotic roots in why danger and hazardous chemicals are often depicted within a triangle. Yet in many ways these pictograms make laundry legible. For this research, the laundry icons became a starting point for establishing a similar iconography for AI. The icons themselves were designed iteratively, following a Research through Design (RtD) methodology. The intention was for users to understand, at a glance or perhaps through a mobile application, an AI’s intentions (or those of its maker) and/or its implications.

The imagined iconography drew on existing understandings and practices from systems architecture and engineering drawings. Since AI is closely related to these fields, the thought was that the iconography could adopt similar symbology.

Co-designing Icons for AI

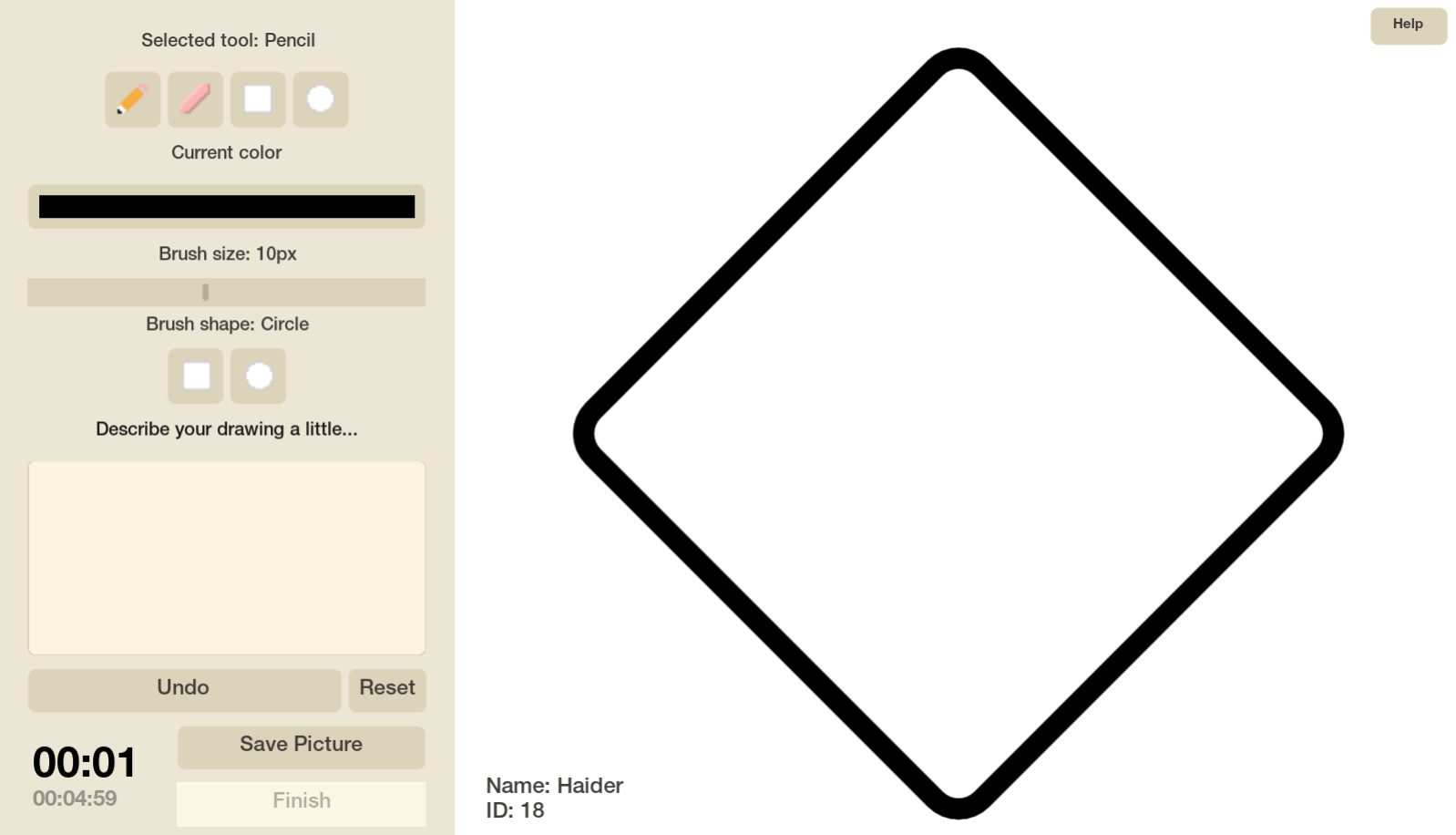

As part of the iterative process, the icons were evaluated through participatory sessions and facilitated workshops. The workshops were initially built around a deck of cards that players interacted with, and were intended to disrupt existing understandings of what these speculative icons could be, along with the meanings associated with AI.

Unfortunately, the physical workshops were severely hindered by the COVID-19 pandemic, but that did not stop the workshops from continuing in another format. The entire workshop was reimagined as a digital ludic experience programmed in Godot and Python. Shifting to a digital platform broadened the workshops’ audience and allowed a variety of exercises to be designed and developed over successive iterations. The idea was for participants to experience the icons and present their own understandings of how AI legibility could be represented.

World Building

The second portion of this project explored the speculative world in which these icons would exist, with many of its details drawn from the workshops. A series of design fictions was imagined using the city of Lancaster as a test bed. In these settings, a regulatory body, the International Organization for AI Legibility (IOAIL), maintains a standard requiring all AI-empowered services and products to display their functions clearly to end-users.

The premise of these fictions was that service providers and manufacturers would need to acquire certification from IOAIL, akin to current standards certifications, and be awarded a band of legibility: the higher the band, the more legible the system. The fictions further explored implications and scenarios in which government bodies, institutions, manufacturers, etc. would use these icons, often within draconian settings.

Fictional certification body imagined with a traffic light system for AI legibility.

Responsible AI

An extension of this project is currently exploring the possibility of responsible AI measures through licensing methods, in collaboration with the RAIL project and team members from MIT and IBM. At its current stage, the project is exploring a variety of means of licensing AI so that it can be represented as ethical and responsibly aware of its actions. Approaches explored so far include story-based methods and HCI.

Researcher(s)

Dr Joseph Lindley

Haider Ali Akmal

Franziska Pilling

Prof Paul Coulton

Dr Adrian Gradinar

Affiliated Project(s)

Uncanny AI

PETRAS IoT Hub

Research presented at

CHI 2020, Honolulu

Alt.CHI 2020, Honolulu

DRS2020 Synergy, Brisbane

Indeterminacy 2020, Dundee

Swiss Design Network

In Journal Publication

IJFMA 2021

1. Pierce, James, and Carl DiSalvo. ‘Dark Clouds, Io&#!+, and 🔮[Crystal Ball Emoji]: Projecting Network Anxieties with Alternative Design Metaphors.’ In Proceedings of the 2017 Conference on Designing Interactive Systems - DIS ’17, 1383–93. Edinburgh, United Kingdom: ACM Press, 2017. https://doi.org/10.1145/3064663.3064795.

2. Lindley, Joseph, Paul Coulton, and Miriam Sturdee. ‘Implications for Adoption.’ In Proceedings of the 2017 CHI Conference, 265–77. New York, New York, USA: ACM Press, 2017. https://doi.org/10.1145/3025453.3025742.